Collecting data is the first step to generating predictions through your Machine Learning (ML) models. The accuracy of a model’s prediction depends on the type and quantity of data you provide.

At Vidora, we work closely with our customers to automate the flow of continuous event data into ML models and predictions. These predictions are based on events generated over time, where an event is defined as an object (e.g. a customer) completing an action (e.g. a purchase) at a point in time.

Continuous streams of event data are generally hard to use for ML because users and products constantly change. ML models have to be retrained regularly so that their predictions reflect those changes. On top of that, the incoming data has to be processed and featurized, which in turn requires reliable infrastructure and automation.

Cortex extends the value of these data sets by automatically ingesting event streams, then cleaning and featurizing them so that they are in the proper format for machine learning. In this blog post we provide an overview of the types of datasets that Cortex supports, and how your business can import these datasets into the platform.

Event Data:

Events are the required building blocks of Cortex’s predictions. Cortex essentially requires three pieces of information to generate predictions:

- When the event occurred – e.g., Timestamp (in Unix or ISO 8601 format)

- What type of event occurred – e.g., click / purchase

- Who completed the event – e.g., Object Id / User ID

Optionally, your events can also include any other information which contextualizes the object completing the event, or conditions of the event itself. This will help the ML model generate better predictions.
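As a rough illustration of this event shape, the sketch below checks that a raw record carries the three required pieces of information and keeps everything else as optional context. The field names (`timestamp`, `event_type`, `object_id`) and the `Event` class are assumptions made for this example, not Cortex's actual schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, Union

# Hypothetical field names chosen for this sketch
REQUIRED_FIELDS = ("timestamp", "event_type", "object_id")


def parse_timestamp(value: Union[int, float, str]) -> datetime:
    """Accept a Unix timestamp (seconds) or an ISO 8601 string; return a UTC datetime."""
    if isinstance(value, (int, float)):
        return datetime.fromtimestamp(value, tz=timezone.utc)
    # Older Python versions reject a trailing "Z" in fromisoformat()
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        # Assumption: timestamps without an offset are treated as UTC
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


@dataclass
class Event:
    timestamp: datetime          # when the event occurred
    event_type: str              # what type of event occurred
    object_id: str               # who completed the event
    context: Dict[str, Any] = field(default_factory=dict)  # optional extras


def make_event(raw: Dict[str, Any]) -> Event:
    """Validate the required fields and move all other keys into `context`."""
    missing = [name for name in REQUIRED_FIELDS if name not in raw]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    context = {k: v for k, v in raw.items() if k not in REQUIRED_FIELDS}
    return Event(
        timestamp=parse_timestamp(raw["timestamp"]),
        event_type=str(raw["event_type"]),
        object_id=str(raw["object_id"]),
        context=context,
    )
```

Calling `make_event` on a record without an `object_id`, for instance, raises a `ValueError` instead of silently accepting an event that could not be attributed to anyone.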

In general, the more information you provide, the wider the set of predictions you can make, and the more accurate those predictions tend to be. Cortex builds features from the pieces of information included in your events data, so it can generate predictions about anything that data describes.
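To make "building features from events" more concrete, here is a deliberately simple sketch that turns a list of events into per-object features: a count for each event type and the time of the most recent event. This is only an illustration of the general idea, under assumed field names, and does not describe how Cortex featurizes data internally.

```python
from collections import Counter, defaultdict
from typing import Any, Dict, List


def featurize(events: List[Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Aggregate raw events into one feature row per object."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    last_seen: Dict[str, int] = {}
    for event in events:
        oid = event["object_id"]
        counts[oid][event["event_type"]] += 1
        # Track the latest timestamp seen for each object
        last_seen[oid] = max(last_seen.get(oid, event["timestamp"]), event["timestamp"])

    features: Dict[str, Dict[str, Any]] = {}
    for oid, type_counts in counts.items():
        row: Dict[str, Any] = {f"count_{name}": n for name, n in type_counts.items()}
        row["last_seen"] = last_seen[oid]
        features[oid] = row
    return features


# Made-up events with Unix timestamps, for illustration only
events = [
    {"timestamp": 1614590100, "event_type": "click", "object_id": "u_1001"},
    {"timestamp": 1614590250, "event_type": "purchase", "object_id": "u_1001"},
    {"timestamp": 1614592931, "event_type": "click", "object_id": "u_1002"},
]
features = featurize(events)
```

Any optional fields attached to the events (price, category, device, and so on) would give a featurization step like this more to work with.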

Data Transfer:

At Vidora, we provide three standard methods for data to be continuously imported into Cortex.

- Real-time APIs:

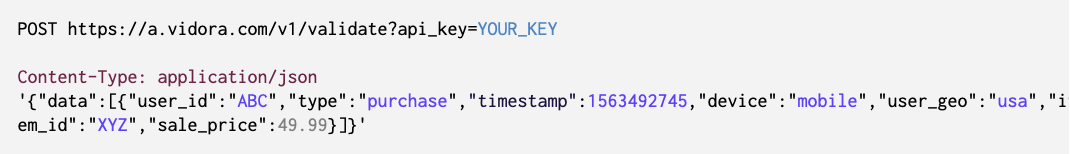

Cortex offers scalable APIs to stream live event data into Cortex as it occurs. These APIs can be deployed server-side or client-side (including integrations with Google Tag Manager and Tealium).

A sample API POST for a customer purchase event contains the three required fields, along with optional parameters that help describe the user completing the event, the item that was purchased, and details of the event itself.
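As a rough illustration, a purchase event of this kind could be assembled and posted server-side as follows. The endpoint URL, the authorization header, and every field name are placeholders assumed for this sketch; they are not Cortex's documented API.

```python
import json
import urllib.request

# Hypothetical placeholder endpoint, not a real Cortex URL
API_URL = "https://api.example.com/events"


def build_event_request(event: dict, api_key: str) -> urllib.request.Request:
    """Wrap an event dict in a JSON POST request (the request is not sent here)."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


# Made-up purchase event: three required fields plus optional context
purchase_event = {
    "timestamp": "2021-03-01T09:17:30Z",   # required: when
    "event_type": "purchase",              # required: what
    "user_id": "u_1001",                   # required: who
    "user": {"country": "US", "plan": "premium"},
    "item": {"item_id": "sku_42", "category": "shoes"},
    "price": 19.99,
}

request = build_event_request(purchase_event, api_key="YOUR_API_KEY")
# urllib.request.urlopen(request) would send it; omitted so the sketch runs offline.
```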

- Batch Uploads:

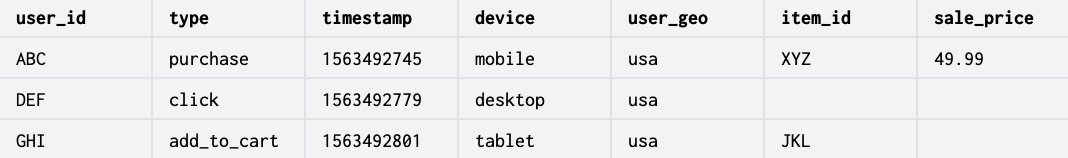

Alternatively, transfer batches of event data into Cortex from your data lake or analytics vendor. To use this method, schedule a recurring file upload (CSV or JSON preferred) into a directory hosted by either you or Vidora (e.g. an AWS S3 bucket). Your Cortex account will automatically ingest the events contained in any file uploaded to this directory.

Sample rows of a CSV file containing events from an eCommerce business would include the three required fields, plus four optional fields.
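A file of that shape might look like the sketch below, which also checks that the required columns are present before the file is scheduled for upload. The column names and rows are invented for illustration (three required columns followed by four optional ones) and are not a Cortex-mandated layout.

```python
import csv
import io
from typing import Dict, List

# Assumed names for the three required columns
REQUIRED_COLUMNS = {"timestamp", "event_type", "user_id"}

# Made-up sample rows: required columns first, then four optional ones
SAMPLE_CSV = """timestamp,event_type,user_id,item_id,price,category,device
2021-03-01T09:15:00Z,click,u_1001,sku_42,19.99,shoes,mobile
2021-03-01T09:17:30Z,purchase,u_1001,sku_42,19.99,shoes,mobile
2021-03-01T10:02:11Z,click,u_1002,sku_7,5.50,socks,desktop
"""


def read_events(text: str) -> List[Dict[str, str]]:
    """Parse CSV text into rows, failing early if a required column is missing."""
    reader = csv.DictReader(io.StringIO(text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing required columns: {sorted(missing)}")
    return list(reader)


rows = read_events(SAMPLE_CSV)
```

Running a check like this before each scheduled upload catches a renamed or dropped column on your side, rather than after the file has been ingested.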

- Sandbox account:

If you wish to test Cortex via a one-time pipeline without having to set up a data integration, you can use a sandbox account to upload a CSV file containing historical events data.

We also support custom data ingestion for data that is more complex or scattered. The services we provide include the following:

- Transforming raw events data

- Merging events with other data sources such as item catalogue, user metadata, subscriptions table, etc.
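To give a sense of what merging events with another data source involves, the sketch below joins each event to an item catalogue on a shared `item_id` key. The function, the key name, and the sample records are assumptions for illustration and do not describe Vidora's actual ingestion service.

```python
from typing import Any, Dict, List


def enrich_events(
    events: List[Dict[str, Any]],
    catalogue: Dict[str, Dict[str, Any]],
) -> List[Dict[str, Any]]:
    """Attach catalogue attributes to each event, matched on item_id."""
    enriched = []
    for event in events:
        item = catalogue.get(event.get("item_id"), {})
        # Event fields take precedence if a key appears in both sources
        enriched.append({**item, **event})
    return enriched


# Made-up records for illustration
catalogue = {"sku_42": {"category": "shoes", "brand": "Acme"}}
events = [
    {"timestamp": 1614590250, "event_type": "purchase", "user_id": "u_1001", "item_id": "sku_42"},
    {"timestamp": 1614592931, "event_type": "click", "user_id": "u_1002", "item_id": "sku_7"},
]
enriched = enrich_events(events, catalogue)
```

Events whose item is not in the catalogue pass through unchanged, so no events are lost in the join.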

Once we receive the data, Cortex automatically pre-processes the data and prepares it to build an ML model.

Summary:

It is challenging to work with massive data sets that update continuously. Cortex simplifies the equation by connecting the hardest parts of ML into end-to-end pipelines that anyone in your business can run.

Cortex thrives on event data. The most essential pieces of information we require from event data are the Object ID, the timestamp, and the type of event completed. Any additional information helps contextualize the object completing the event, or the conditions of the event itself.

We provide three standard methods to transfer data into Cortex. As soon as our servers receive the data, Cortex automates the entire data wrangling and machine learning process to help our customers achieve their goals. Contact our team to find out how ML can benefit your business!